Robots, like humans, generally use two different sensory modalities when interacting with the world. There’s exteroceptive perception (or exteroception), which comes from external sensing systems like lidar, cameras, and eyeballs. And then there’s proprioceptive perception (or proprioception), which is internal sensing, involving things like touch and force sensing. Generally, we humans use both of these sensing modalities at once to move around, with exteroception helping us plan ahead and proprioception kicking in when things get tricky. You use proprioception in the dark, for example, where movement is still totally possible; you just do it slowly and carefully, relying on balance and feeling your way around.

For legged robots, exteroception is what enables them to do all the cool stuff—with really good external sensing and the time (and compute) to do some awesome motion planning, robots can move dynamically and fast. Legged robots are much less comfortable in the dark, however, or really under any circumstances where the exteroception they need either doesn’t come through (because a sensor is not functional for whatever reason) or just totally sucks because of robot-unfriendly things like reflective surfaces or thick undergrowth or whatever. This is a problem because the real world is frustratingly full of robot-unfriendly things.

The research that the Robotic Systems Lab at ETH Zürich has published in Science Robotics showcases a control system that allows a legged robot to evaluate the reliability of the exteroceptive information it’s getting. When the data are good, the robot plans ahead and moves quickly. But when the data seem to be incomplete, noisy, or misleading, the controller gracefully degrades to proprioceptive locomotion instead. This means that the robot keeps moving (maybe more slowly and carefully, but it keeps moving), and eventually it’ll get to the point where it can rely on exteroceptive sensing again. It’s a technique that humans and animals use, and now robots can use it too, combining speed and efficiency with safety and reliability to handle almost any kind of challenging terrain.

We got a compelling preview of this technique during the DARPA SubT Final Event last fall, when it was being used by Team CERBERUS’ ANYmal legged robots to help them achieve victory. I’m honestly not sure whether the SubT final course was more or less challenging than some mountain climbing in Switzerland, but the performance in the video below is quite impressive, especially since ANYmal managed to complete the uphill portion of the hike four minutes faster than the suggested time for an average human.

Learning robust perceptive locomotion for quadrupedal robots in the wild

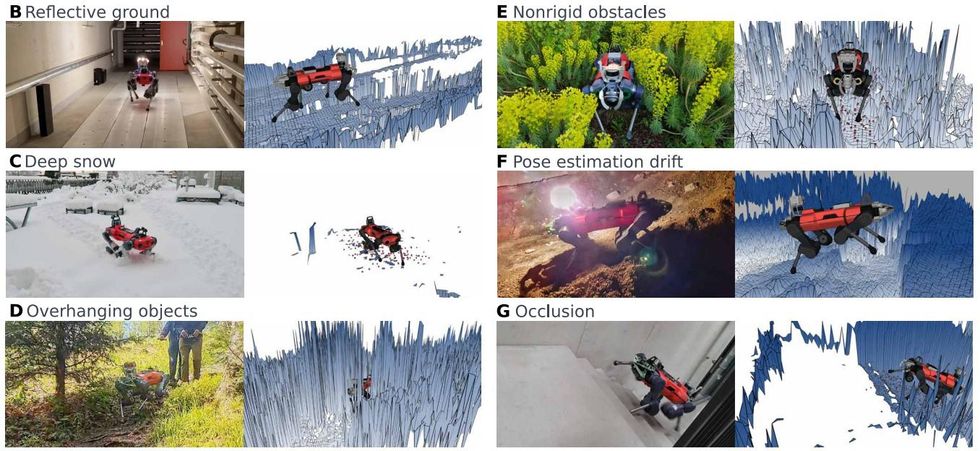

Those clips of ANYmal walking through dense vegetation and deep snow do a great job of illustrating how well the system functions. While the exteroceptive data is showing obstacles all over the place and wildly inaccurate ground height, the robot knows where its feet are, and relies on that proprioceptive data to keep walking forward safely and without falling. Here are some other examples showing common problems with sensor data that ANYmal is able to power through:

Other legged robots do use proprioception for reliable locomotion, but what’s unique here is this seamless combination of speed and robustness, with the controller moving between exteroception and proprioception based on how confident it is about what it’s seeing. And ANYmal’s performance on this hike, as well as during the SubT Final, is ample evidence of how well this approach works.

For more details, we spoke with Takahiro Miki, a PhD student in the Robotic Systems Lab at ETH Zürich and first author on the paper.

IEEE Spectrum: The paper’s intro says “until now, legged robots could not match the performance of animals in traversing challenging real-world terrain.” Suggesting that legged robots can now “match the performance of animals” seems very optimistic. What makes you comfortable with that statement?

Takahiro Miki: Achieving a level of mobility similar to animals is probably the goal for many of us researchers in this area. However, robots are still far behind nature and this paper is only a tiny step in this direction.

Your controller enables robust traversal of “harsh natural terrain.” What does “harsh” mean, and can you describe the kind of terrain that would be in the next level of difficulty beyond “harsh”?

Miki: We aim to send robots to places that are too dangerous or difficult for humans to reach. In this work, by “harsh,” we mean places that are hard not only for robots but also for us, such as steep hiking trails or snow-covered trails that are tricky to traverse. With our approach, the robot traversed steep and wet rocky surfaces, dense vegetation, and rough terrain in underground tunnels or natural caves with loose gravel, all at human walking speed.

We think the next level would be somewhere which requires precise motion with careful planning such as stepping stones, or some obstacles that require more dynamic motion, such as jumping over a gap.

How much do you think having a human choose the path during the hike helped the robot be successful?

Miki: The intuition of the human operator choosing a feasible path for the robot certainly helped the robot’s success. Even though the robot is robust, it cannot walk over obstacles which are physically impossible, e.g., obstacles bigger than the robot or cliffs. In other scenarios such as during the DARPA SubT Challenge however, a high-level exploration and path planning algorithm guides the robot. This planner is aware of the capabilities of the locomotion controller and uses geometric cues to guide the robot safely. Achieving this for an autonomous hike in a mountainous environment, where a more semantic environment understanding is necessary, is our future work.

What impressed you the most in terms of what the robot was able to handle?

Miki: The snow stairs were the very first experiment we conducted outdoors with the current controller, and I was surprised that the robot could handle the slippery snowy stairs. Also during the hike, the terrain was quite steep and challenging. When I first checked the terrain, I thought it might be too difficult for the robot, but it could just handle all of them. The open stairs were also challenging due to the difficulty of mapping. Because the lidar scan passes through the steps, the robot couldn’t see the stairs properly. But the robot was robust enough to traverse them.

At what point does the robot fall back to proprioceptive locomotion? How does it know if the data its sensors are getting are false or misleading? And how much does proprioceptive locomotion impact performance or capabilities?

Miki: We think the robot detects whether the exteroception matches the proprioception through its foot contacts or foot positions. If the map is correct, the feet make contact where the map suggests. Then the controller recognizes that the exteroception is correct and makes use of it. Once it experiences that the foot contact doesn’t match the ground on the map, or that the feet go below the map, it recognizes that exteroception is unreliable and relies more on proprioception. We showed this in this supplementary video experiment:

Supplementary Robustness Evaluation

However, since we trained the neural network in an end-to-end manner, where the student policy just tries to follow the teacher’s action by trying to capture the necessary information in its belief state, we can only guess how it knows. In the initial approach, we were just directly inputting exteroception into the control policy. In this setup, the robot could walk over obstacles and stairs in the lab environment, but once we went outside, it failed due to mapping failures. Therefore, combining with proprioception was critical to achieve robustness.
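To make the intuition in Miki’s answer a bit more concrete, here is a minimal hand-written sketch in Python. It is not the method from the paper: the real controller is a neural network trained end to end, and, as Miki says, the team can only guess how it decides. The names `FootSample`, `exteroception_trust`, and `blend_height`, along with the 0.2-meter error limit, are assumptions invented purely for illustration.

```python
from dataclasses import dataclass


@dataclass
class FootSample:
    """One footstep: what the map predicted versus what the foot felt (hypothetical)."""
    map_height: float      # ground height the elevation map reports under the foot (m)
    contact_height: float  # height at which the foot actually made contact (m)


def exteroception_trust(samples, max_error=0.2):
    """Return a trust weight in [0, 1] for the map, based on recent footsteps.

    Assumption: trust falls off linearly with the mean map-versus-contact
    error and reaches zero at `max_error` meters. With no footsteps to
    compare against, this sketch chooses not to trust the map at all.
    """
    if not samples:
        return 0.0
    mean_error = sum(abs(s.map_height - s.contact_height) for s in samples) / len(samples)
    return max(0.0, 1.0 - mean_error / max_error)


def blend_height(map_height, proprio_height, trust):
    """Mix the map's height estimate with a proprioceptive one using the trust weight."""
    return trust * map_height + (1.0 - trust) * proprio_height


if __name__ == "__main__":
    # Firm ground: the feet land where the map says they should.
    firm = [FootSample(0.10, 0.10), FootSample(0.12, 0.12)]
    # Deep snow: the map reports the snow surface, but the feet sink well below it.
    snow = [FootSample(0.50, 0.10), FootSample(0.45, 0.05)]
    print("trust on firm ground:", exteroception_trust(firm))
    print("trust in deep snow:", exteroception_trust(snow))
```

A learned policy presumably does something far less tidy than this linear rule, but the sketch captures the behavior described in the interview: when feet keep landing where the map predicts, the map gets used, and when they sink through it, the estimate shifts toward what the legs can feel.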

How much are you constrained by the physical performance of the robot itself? If the robot were stronger or faster, would you be able to take advantage of that?

Miki: When we use reinforcement learning, the policy usually tries to use as much torque and speed as it is allowed to use. Therefore, if the robot were stronger or faster, we think we could increase robustness further and overcome more challenging obstacles at higher speed.

What remains challenging, and what are you working on next?

Miki: Currently, we steer the robot manually for most of the experiments (except the DARPA SubT Challenge). Adding more levels of autonomy is the next goal. As mentioned above, we want the robot to complete a difficult hike without human operators. Furthermore, there is a lot of room for improvement in the locomotion capability of the robot. For “harsher” terrains, we want the robot to perceive the world in 3D and manifest richer behaviors such as jumping over stepping stones or crawling under overhanging obstacles, which is not possible with the current 2.5D elevation map.
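Why can’t a 2.5D elevation map handle overhangs? A short Python sketch, under stated assumptions, shows the limitation. A 2.5D map stores a single height per ground cell, so a beam and the floor beneath it collapse into one number. The function name `build_elevation_map`, the 0.1-meter cell size, and the choice to keep the highest point per cell are all assumptions for illustration; real elevation-mapping pipelines fuse measurements in more sophisticated ways.

```python
import math


def build_elevation_map(points, cell_size=0.1):
    """Collapse 3D points into a 2.5D map holding one height per (x, y) cell.

    Assumption: each cell keeps the highest z value that falls into it.
    """
    heights = {}
    for x, y, z in points:
        cell = (math.floor(x / cell_size), math.floor(y / cell_size))
        heights[cell] = max(z, heights.get(cell, float("-inf")))
    return heights


if __name__ == "__main__":
    points = [
        (0.05, 0.05, 0.0),  # floor underneath an overhanging beam
        (0.05, 0.05, 0.6),  # the beam itself, 0.6 m above the same spot
        (0.25, 0.05, 0.0),  # open floor a little farther along
    ]
    for cell, height in sorted(build_elevation_map(points).items()):
        print(cell, height)
```

In the resulting map, the cell under the beam holds only the beam’s height, so the open space beneath it is gone. A planner reading this map would see a 0.6-meter obstacle, not a gap the robot might crawl through, which is why richer behaviors call for a full 3D representation.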

Source: IEEE Spectrum Robotics