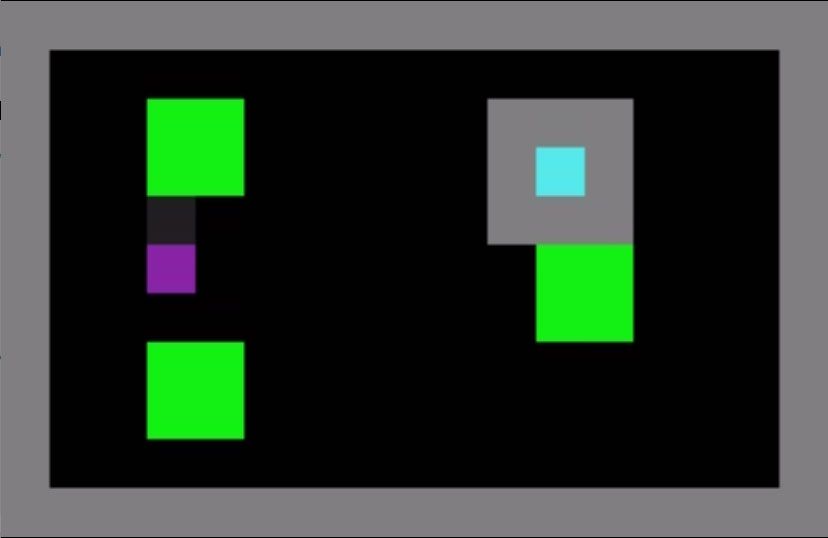

The virtual creatures played two games in which they collectively navigated a two-dimensional world to gather apples. In Harvest, apples grow faster when more apples are nearby, so once they’re all gone, they stop appearing. Coordinated restraint is required. In Cleanup, apples stop growing if a nearby aquifer isn’t continuously cleaned. (In soccer, if everyone on your team runs toward the ball, you’ll lose; a team needs both offense and defense.)
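The Harvest regrowth rule can be made concrete with a small simulation. What follows is a minimal Python sketch, not the researchers’ code: the grid representation, the neighborhood size `RADIUS`, and the probabilities returned by the hypothetical `regrowth_probability` function are all illustrative assumptions. It captures only the property the game depends on: an empty cell regrows faster when more apples surround it, and it never regrows once no apples remain nearby.

```python
import random

# Hypothetical neighborhood size, in grid cells (an illustrative assumption).
RADIUS = 2


def regrowth_probability(nearby_apples: int) -> float:
    """More apples nearby means faster regrowth; none nearby means none.

    The specific probabilities are made up for illustration.
    """
    if nearby_apples == 0:
        return 0.0
    if nearby_apples <= 2:
        return 0.01
    if nearby_apples <= 4:
        return 0.05
    return 0.1


def count_nearby(grid: list[list[bool]], row: int, col: int) -> int:
    """Count apples in the square window of size RADIUS around (row, col), excluding the cell itself."""
    total = 0
    for r in range(max(0, row - RADIUS), min(len(grid), row + RADIUS + 1)):
        for c in range(max(0, col - RADIUS), min(len(grid[0]), col + RADIUS + 1)):
            if (r, c) != (row, col) and grid[r][c]:
                total += 1
    return total


def regrow_step(grid: list[list[bool]], rng: random.Random) -> list[list[bool]]:
    """Return a new grid in which each empty cell may sprout an apple."""
    new_grid = [row[:] for row in grid]
    for r, row in enumerate(grid):
        for c, has_apple in enumerate(row):
            if not has_apple:
                p = regrowth_probability(count_nearby(grid, r, c))
                # rng.random() is in [0, 1), so p == 0.0 never triggers regrowth.
                if rng.random() < p:
                    new_grid[r][c] = True
    return new_grid


if __name__ == "__main__":
    rng = random.Random(0)
    # A 5x5 field with a small cluster of apples in one corner.
    field = [[False] * 5 for _ in range(5)]
    field[0][0] = field[0][1] = field[1][0] = True
    for _ in range(50):
        field = regrow_step(field, rng)
    print(sum(cell for row in field for cell in row), "apples after 50 steps")
```

Because regrowth depends on apples already nearby, a field stripped bare stays bare no matter how many steps run, which is why grabbing every apple is self-defeating.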

The creatures relied on a form of AI called reinforcement learning, in which an algorithm learns by trial and error, earning rewards for better performance. In this work, each creature earned rewards not only for collecting apples but also for altering the choices of other players, whether that helped or hurt the others.

In one experiment, the creatures estimated their influence using something like humans’ “theory of mind”—the ability to understand others’ thoughts. Through observation, they learned to predict the behavior of others. They could then predict what neighbors would do in response to one action versus another, using counterfactual or “what-if” reasoning. If a particular action would change their neighbors’ behaviors more than other possible actions, it was deemed more influential and thus more desirable.
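The article does not give the researchers’ formula, so the following is only a hedged Python sketch of one way to turn this what-if reasoning into a number. It assumes the creature already has a learned model of a neighbor, represented here by a hypothetical `predictions` table that gives the neighbor’s likely next actions for each action the creature might take. The influence of the chosen action is scored as the KL divergence between the neighbor’s predicted behavior given that action and its behavior averaged over all the alternatives. The `total_reward` function and its `influence_weight` are likewise assumptions, showing how such a bonus could be added to the apple reward.

```python
import math

# A probability distribution over a neighbor's possible next actions.
Distribution = list[float]


def kl_divergence(p: Distribution, q: Distribution) -> float:
    """KL(p || q) in nats; terms where p is zero contribute nothing."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def influence_reward(
    predictions: dict[str, Distribution],
    own_policy: dict[str, float],
    action_taken: str,
) -> float:
    """Score how much `action_taken` shifts the neighbor's predicted behavior.

    predictions: hypothetical learned model; for each of the focal creature's
        actions, the neighbor's predicted distribution over its next actions.
    own_policy: the focal creature's probability of taking each action
        (assumed to give action_taken a nonzero probability).
    """
    n = len(predictions[action_taken])
    # Counterfactual baseline: the neighbor's behavior averaged over every
    # action the focal creature could have taken, weighted by its policy.
    marginal = [0.0] * n
    for action, prob in own_policy.items():
        for i, p in enumerate(predictions[action]):
            marginal[i] += prob * p
    return kl_divergence(predictions[action_taken], marginal)


def total_reward(apples: float, influence: float, influence_weight: float = 0.1) -> float:
    """Apples collected plus a weighted influence bonus (the weight is an assumption)."""
    return apples + influence_weight * influence


if __name__ == "__main__":
    # Neighbor actions are indexed 0 and 1 (say, "approach" and "wait").
    predictions = {"left": [0.9, 0.1], "right": [0.5, 0.5], "stay": [0.5, 0.5]}
    policy = {"left": 1 / 3, "right": 1 / 3, "stay": 1 / 3}
    for action in policy:
        print(action, round(influence_reward(predictions, policy, action), 3))
```

In the example table, moving left makes the neighbor much more likely to take its first action, so "left" earns a larger influence score than "stay", whose predicted effect sits closer to the averaged baseline. If every action led to the same prediction, the score would be zero: nothing the creature does would change its neighbor’s behavior.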

The researchers measured success by adding up the apples gathered by all the critters. The population performed better when individuals were rewarded for influence than when they weren’t. It even outperformed unselfish populations in which creatures received extra rewards during training if inequality within the group (measured by the number of apples each critter collected) remained low. Apparently, an intrinsic reward for others’ wellbeing takes coordination only so far without counterfactual reasoning to tell you whether your actions are directly responsible for others’ behavior.
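The comparison can also be sketched in Python. The article says only that the unselfish creatures got extra reward when inequality in apple counts stayed low, so the Gini coefficient and the hypothetical `equality_bonus_rewards` function below are an assumed stand-in, not the scheme the researchers used. `collective_score` is simply the group total described above.

```python
def collective_score(apples_per_creature: list[int]) -> int:
    """Group-level metric: total apples gathered by every creature."""
    return sum(apples_per_creature)


def gini(values: list[int]) -> float:
    """Gini coefficient of apple counts: 0.0 means perfect equality."""
    n = len(values)
    total = sum(values)
    if n == 0 or total == 0:
        # Nobody has any apples, so treat the group as perfectly equal.
        return 0.0
    # Sum of absolute differences over all ordered pairs, normalized.
    abs_diffs = sum(abs(a - b) for a in values for b in values)
    return abs_diffs / (2 * n * total)


def equality_bonus_rewards(
    apples_per_creature: list[int], bonus_weight: float = 1.0
) -> list[float]:
    """Hypothetical unselfish reward: own apples plus a shared bonus
    that shrinks as inequality grows (bonus_weight is an assumption)."""
    bonus = bonus_weight * (1.0 - gini(apples_per_creature))
    return [a + bonus for a in apples_per_creature]
```

Two populations can post the same `collective_score`, for example `[5, 5, 5, 5]` and `[0, 0, 0, 20]`, while earning very different equality bonuses. Nothing in this reward tells any individual whether its own actions changed what the others did.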

Critters weren’t just nudging each other toward or away from apples. The researchers found that the critters were using their actions to send messages to each other, analogous to a “bee waggle dance.” In another experiment, the researchers gave the creatures the additional ability to broadcast messages without moving. Again, the group scored higher when motivated to influence each other. What’s more, creatures that were easily influenced, the good listeners, collected more apples than those that weren’t.

The research is “really neat,” says Ryan Lowe, a computer scientist at McGill University, in Montreal, who studies AI and coordination but was uninvolved in the work. Adding an impetus for influence is “kind of intuitive,” he says, “but sometimes intuitive things don’t work.”

In these experiments, selfish status-seeking led to cooperation, but in other situations it could lead to harmful manipulation (think of marketers, dictators, or bad friends). That’s why Natasha Jaques, a computer scientist at the Massachusetts Institute of Technology, in Cambridge, who spearheaded the work during an internship at Alphabet’s DeepMind, in London, wants to combine a drive for sway with one for largesse. Eventual applications could include autonomous vehicles, warehouse robots, or household helpers, she says: “Anything where you want a robot to coordinate with other robots or with humans.” In the meantime, she’s eager to try more complex games, including robot soccer.