Neural networks that imitate the workings of the human brain now often generate art, power computer vision, and drive many more applications. Now a neural network microchip from China that uses photons instead of electrons, dubbed Taichi, can run AI tasks as well as its electronic counterparts with a thousandth as much energy, according to a new study.

AI typically relies on artificial neural networks in applications such as analyzing medical scans and generating images. In these systems, circuit components called neurons (analogous to neurons in the human brain) are fed data and cooperate to solve a problem, such as recognizing faces. Neural nets are dubbed “deep” if they possess multiple layers of these neurons.

As neural networks grow in size and power, they are becoming more energy hungry when run on conventional electronics. For instance, a 2022 Nature study suggested that OpenAI spent US $4.6 million to run 9,200 GPUs for two weeks to train its state-of-the-art neural network GPT-3.

The drawbacks of electronic computing have led some researchers to investigate optical computing as a promising foundation for next-generation AI. This photonic approach uses light to perform computations more quickly and with less power than an electronic counterpart.

Now scientists at Tsinghua University in Beijing and the Beijing National Research Center for Information Science and Technology have developed Taichi, a photonic microchip that can perform as well as electronic devices on advanced AI tasks while proving far more energy efficient.

“Optical neural networks are no longer toy models,” says Lu Fang, an associate professor of electronic engineering at Tsinghua University. “They can now be applied in real-world tasks.”

How does an optical neural net work?

Two strategies exist for developing optical neural networks: scattering light in specific patterns within the microchips, or getting light waves to interfere with each other in precise ways inside the devices. When input in the form of light flows into these optical neural networks, the output light encodes data from the complex operations performed within these devices.

Both photonic computing approaches have significant advantages and disadvantages, Fang explains. For instance, optical neural networks that rely on scattering, or diffraction, can pack many neurons close together and consume virtually no energy. Diffraction-based neural nets rely on coherent diffraction of light beams as they pass through optical layers that represent the network’s operations. One drawback of diffraction-based neural nets, however, is that they cannot be reconfigured. Each string of operations can essentially only be used for one specific task.

In contrast, optical neural networks that depend on interference can readily be reconfigured. Interference-based neural nets use superpositions of multiple coherent beams to perform their operations. Their drawback, however, is that interferometers are bulky, which restricts how well such neural nets can scale up. They also consume a lot of energy.
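To make the idea of reconfigurable interference concrete, here is a minimal sketch of a single idealized Mach-Zehnder interferometer, a common building block of interference-based photonic circuits. The article does not say which interferometer design Taichi uses, so the choice of device, the function name `mzi_output_powers`, and the lossless 50:50 beam splitters are all assumptions made for illustration.

```python
import cmath
import math


def mzi_output_powers(theta):
    """Output powers of an idealized, lossless Mach-Zehnder interferometer.

    Light of unit power enters port 0. `theta` is the tunable phase shift
    (in radians) applied to one arm between two 50:50 beam splitters.
    Hypothetical helper for illustration, not taken from the Taichi study.
    """
    s = 1 / math.sqrt(2)

    def beam_splitter(a, b):
        # Symmetric 50:50 splitter: the reflected path picks up a factor of i.
        return s * (a + 1j * b), s * (1j * a + b)

    a, b = beam_splitter(1 + 0j, 0j)   # split the input beam into two arms
    a *= cmath.exp(1j * theta)         # reconfigurable phase on one arm
    a, b = beam_splitter(a, b)         # recombine: the two beams interfere
    return abs(a) ** 2, abs(b) ** 2


if __name__ == "__main__":
    for theta in (0.0, math.pi / 2, math.pi):
        p0, p1 = mzi_output_powers(theta)
        print(f"theta={theta:.3f}  port0={p0:.3f}  port1={p1:.3f}")
```

Changing `theta` shifts power between the two outputs (sin²(θ/2) to one port, cos²(θ/2) to the other), which is the sense in which such a device can be reprogrammed after fabrication.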

In addition, current photonic chips experience unavoidable errors. Attempting to scale up optical neural networks by increasing the number of neuron layers typically only increases this inevitable noise exponentially. As a result, until now, optical neural networks were limited to basic AI tasks such as simple pattern recognition. Optical neural nets, in other words, were generally not suitable for advanced, real-world applications, Fang says.

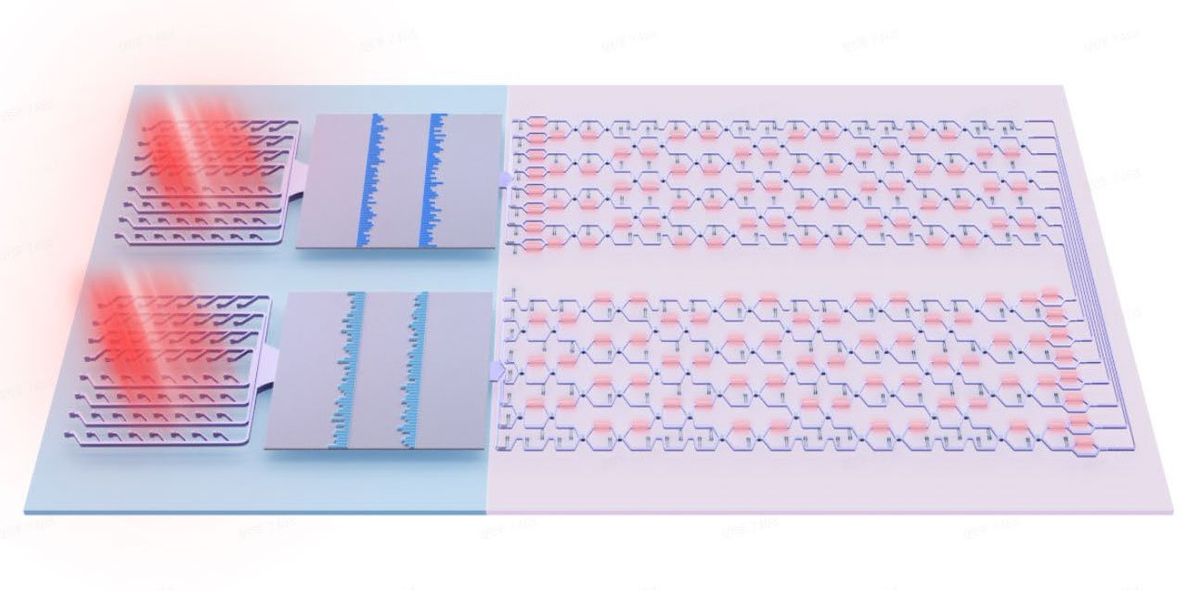

The researchers say that Taichi, by contrast, is a hybrid design that combines both diffraction and interference approaches. It contains clusters of diffractive units that can compress data for large-scale input and output in a compact space. But their chip also contains arrays of interferometers for reconfigurable computation. The encoding protocol developed for Taichi divides challenging tasks and large network models into sub-problems and sub-models that can be distributed across different modules, Fang says.
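The article does not describe Taichi's encoding protocol in detail, so the following is only a generic sketch of the underlying idea: a large linear operation can be divided into sub-models that separate modules compute independently. The function names and the row-wise split are hypothetical choices for illustration, not the researchers' method.

```python
def matvec(weights, x):
    """Multiply a weight matrix (list of rows) by an input vector."""
    return [sum(w * v for w, v in zip(row, x)) for row in weights]


def split_rows(weights, n_modules):
    """Split the rows into at most n_modules contiguous sub-models."""
    size = -(-len(weights) // n_modules)  # ceiling division
    return [weights[i:i + size] for i in range(0, len(weights), size)]


def distributed_matvec(weights, x, n_modules):
    """Compute each sub-model's slice of the output, then concatenate.

    Each slice depends only on its own rows and the shared input, so in
    hardware the slices could be evaluated by different modules in parallel.
    """
    out = []
    for sub_model in split_rows(weights, n_modules):
        out.extend(matvec(sub_model, x))
    return out


if __name__ == "__main__":
    weights = [[1, 2, 3], [4, 5, 6], [7, 8, 9], [1, 0, 1]]
    x = [1, 0, 2]
    print(distributed_matvec(weights, x, n_modules=2) == matvec(weights, x))
```

Because the sub-models never exchange intermediate results, the partitioned computation returns the same output as the single large one.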

How does Taichi blend both kinds of neural nets?

Previous research typically sought to expand optical neural network capacity by mimicking what is often done with their electronic counterparts—increasing the number of neuron layers. Instead, Taichi’s architecture scales up by distributing computing across multiple chiplets that operate in parallel. This means Taichi can avoid the problem of exponentially accumulating errors that happens when optical neural networks stack many neuron layers together.
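A toy calculation illustrates why going wide instead of deep helps. It assumes, purely for illustration, that every layer multiplies the relative error by a fixed factor of (1 + ε); the error rate, layer count, and chiplet count below are hypothetical and are not figures from the study.

```python
def compounded_error(per_layer_error, depth):
    """Relative error after `depth` layers in a toy multiplicative model.

    Each layer is assumed to scale the error by (1 + per_layer_error),
    so the total grows exponentially with depth.
    """
    return (1 + per_layer_error) ** depth - 1


def compare(per_layer_error, total_layers, n_chiplets):
    """Compare one deep stack with the same layer budget spread in parallel."""
    deep = compounded_error(per_layer_error, total_layers)
    # In the wide layout each signal crosses only one chiplet's layers.
    shallow_depth = max(1, total_layers // n_chiplets)
    wide = compounded_error(per_layer_error, shallow_depth)
    return deep, wide


if __name__ == "__main__":
    deep, wide = compare(per_layer_error=0.05, total_layers=32, n_chiplets=16)
    print(f"deep stack: {deep:.2f}  wide layout: {wide:.2f}")
```

In this model the error depends only on how many layers a signal passes through in sequence, so adding parallel chiplets increases capacity without compounding the noise.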

“This ‘shallow in depth but broad in width’ architecture guarantees network scale,” Fang says.

For instance, previous optical neural networks usually only possessed thousands of parameters—the connections between neurons that mimic the synapses linking biological neurons in the human brain. In contrast, Taichi boasts 13.96 million parameters.

Previous optical neural networks were often limited to classifying data along just a dozen or so categories—for instance, figuring out whether images represented one of 10 digits. In contrast, in tests with the Omniglot database of 1,623 different handwritten characters from 50 different alphabets, Taichi displayed an accuracy of 91.89 percent, comparable to its electronic counterparts.

The scientists also tested Taichi on the advanced AI task of content generation. They found it could produce music clips in the style of Johann Sebastian Bach and generate images of numbers and landscapes in the style of Vincent Van Gogh and Edvard Munch.

All in all, the researchers found Taichi displayed an energy efficiency of up to roughly 160 trillion operations per second per watt and an area efficiency of nearly 880 trillion multiply-accumulate operations (the most basic operation in neural networks) per square millimeter. This makes it more than 1,000 times more energy efficient than one of the latest electronic GPUs, the NVIDIA H100, as well as roughly 100 times more energy efficient and 10 times more area efficient than other previous optical neural networks.
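As a quick sanity check on these figures, operations per second per watt is the same as operations per joule, so the reported peak efficiency can be inverted to get the energy cost of a single operation. The sketch below uses only the numbers quoted in the article; the function and constant names are made up for illustration, and the baseline bound is inferred from the stated ratio, not taken from any hardware specification.

```python
def joules_per_operation(ops_per_second_per_watt):
    """Invert an efficiency figure: 1 W = 1 J/s, so ops/s/W equals ops/J."""
    return 1.0 / ops_per_second_per_watt


# Peak figure quoted in the article: roughly 160 trillion ops per second per watt.
TAICHI_OPS_PER_JOULE = 160e12

energy_per_op = joules_per_operation(TAICHI_OPS_PER_JOULE)

# "More than 1,000 times" more efficient implies the electronic baseline
# used in the comparison sits below this many operations per joule.
baseline_upper_bound = TAICHI_OPS_PER_JOULE / 1000

if __name__ == "__main__":
    print(f"energy per operation: {energy_per_op * 1e15:.2f} femtojoules")
    print(f"implied baseline: below {baseline_upper_bound:.2e} ops per joule")
```

At the quoted peak, each operation costs on the order of a few femtojoules.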

Although the Taichi chip is compact and energy-efficient, Fang cautions that it relies on many other systems, such as a laser source and high-speed data coupling. These other systems are far more bulky than a single chip, “taking up almost a whole table,” she notes. In the future, Fang and her colleagues aim to add more modules onto the chips to make the whole system more compact and energy-efficient.

The scientists detailed their findings online 11 April in the journal Science.

Source: IEEE Semiconductors